Tired of bad AI responses? Here's how to fix them for good

Better questions, better results—find out how top PMs use AI to work smarter, not harder

This article is sponsored by the AI Empowered PM bootcamp.

Ready to get 10 hours of your week back?

The AI Empowered Product Manager bootcamp - the simplest and fastest way to acquire all the skills you need to master AI and supercharge your productivity.

Next cohort starts 2/11. Want to join us?

AI is transforming PM workflows, but only if you know how to ask questions well.

Imagine this: You sit down to brainstorm product requirements, synthesize user feedback, or draft GTM materials using AI. The potential to save hours of work is incredible.

But then, the results come in—generic, irrelevant, or just plain wrong. Frustrating, right?

Here’s the truth: The quality of AI’s responses directly impacts your productivity, decision-making, and ultimately, your success as a PM.

Great output starts with great input - it all comes down to how you ask.

In this article, I’ll show you:

How most PMs ask questions (and why it doesn’t work)

How top PMs ask questions

The secret to generating higher-quality results with the 5 Keys framework

Beyond prompt engineering

How most PMs ask questions (and why it doesn’t work)

Let’s take a simple PM task: generating user stories.

Here’s how most PMs ask the LLM (true story):

Please generate 10 user stories for a <product / feature>

At first glance, it seems like a straightforward request.

But the problem with asking the question this way is that you end up with vague, generic, and irrelevant responses.

For example, here are the user stories generated for a digital onboarding experience for home mortgages.

You can view the entire chat thread here.

They are all over the place for three reasons:

Lack of Context: The prompt provides minimal detail about the product, user, or goals. The only input is "digital onboarding experience for home mortgages," forcing the AI to make broad assumptions.

Insufficient Instructions: The instruction, "generate 10 user stories," is too vague. It lacks guidance on user story best practices, level of detail, technical and implementation guidelines, etc.

No Examples: AI performs best when you provide clear expectations, typically by specifying a format or offering examples. Without this structure, the AI is left to guess, often resulting in irrelevant outputs.

The result? You spend more time refining the output, making AI a time sink rather than a time saver.

Fortunately, asking better questions is easier than you think.

How top PMs ask questions

Better questions mean better results—here’s how top PMs do it.

Let’s stick with the same task: generating user stories for a digital onboarding experience for home mortgages. And let’s provide more details.

Here’s how a top PM asks:

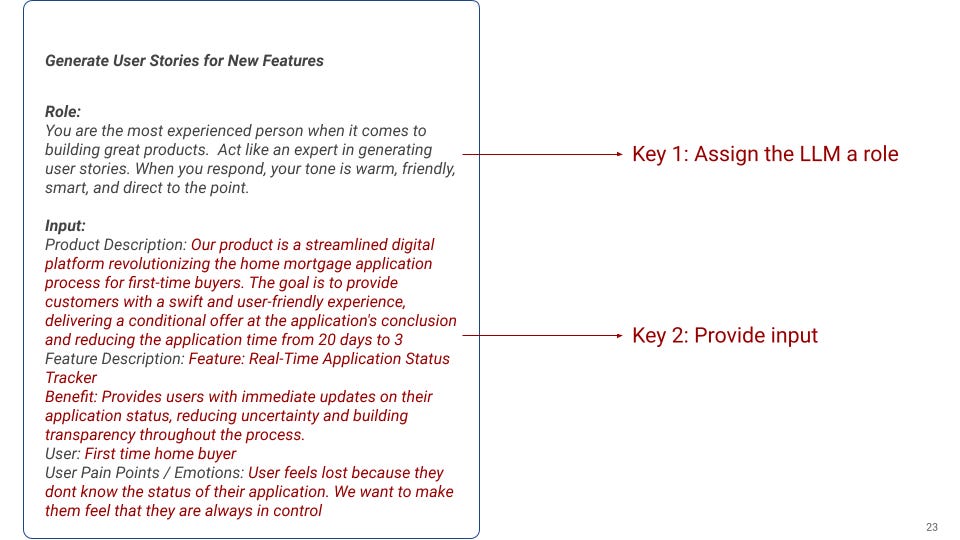

Role:

You are the most experienced person when it comes to building great products. Act like an expert in generating user stories. When you respond, your tone is warm, friendly, smart, and straight to the point.

Input:

Product Description: <Brief description of the product, problem, user benefit, and desired outcome>

User: <Brief description of the user; we recommend using only one user at a time>

User Pain Points / Emotions: <Brief description of the user pain or emotional needs>

Feature Description: <Brief description of the feature and how the user will benefit from it>

Instructions:

Generate a list of 10 unique user stories.

Make sure the user story is independent and can be developed and tested separately.

The user stories must be detailed and prioritize user needs and benefits.

Maintain a user-centric perspective at all times. Avoid technical jargon and implementation details.

Provide only the list of user stories.

Format:

For your response, please provide the user story using the following format:

As a [user], I want [goal] so that [benefit].

Good Example:

As a salesperson, I want to use built-in RFP templates so that I can quickly create responses and save time.
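Because the Format key pins every story to a single sentence pattern, the AI's answer becomes easy to check mechanically before you paste it anywhere. The sketch below is one hypothetical way to do that in Python, assuming the response arrives as plain text with one story per line (possibly numbered or bulleted). `UserStory`, `parse_story`, and `check_response` are illustrative names, not part of any tool.

```python
import re
from typing import NamedTuple, Optional

# Leading list markers such as "1.", "2)", "-", "*" that chat models often add.
LIST_MARKER = re.compile(r"^(?:\d+[.)]|[-*])\s*")

# The Format line from the prompt: "As a [user], I want [goal] so that [benefit]."
# "a"/"an" and a comma before "so that" are both tolerated; the final period is optional.
STORY_PATTERN = re.compile(
    r"^As an? (?P<user>.+?), I want (?P<goal>.+?),? so that (?P<benefit>.+?)\.?$",
    re.IGNORECASE,
)


class UserStory(NamedTuple):
    user: str
    goal: str
    benefit: str


def parse_story(line: str) -> Optional[UserStory]:
    """Split one line into its parts, or return None if it breaks the format."""
    text = LIST_MARKER.sub("", line.strip())
    match = STORY_PATTERN.match(text)
    if match is None:
        return None
    return UserStory(match["user"], match["goal"], match["benefit"])


def check_response(response: str) -> list[str]:
    """Return every non-blank line of the AI's answer that does not fit the format."""
    lines = [line.strip() for line in response.splitlines() if line.strip()]
    return [line for line in lines if parse_story(line) is None]
```

An empty list from `check_response` means every line followed the requested format; anything it returns is a candidate for a follow-up prompt.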

Now look at the results when you use a well-structured prompt:

You can view the entire chat here.

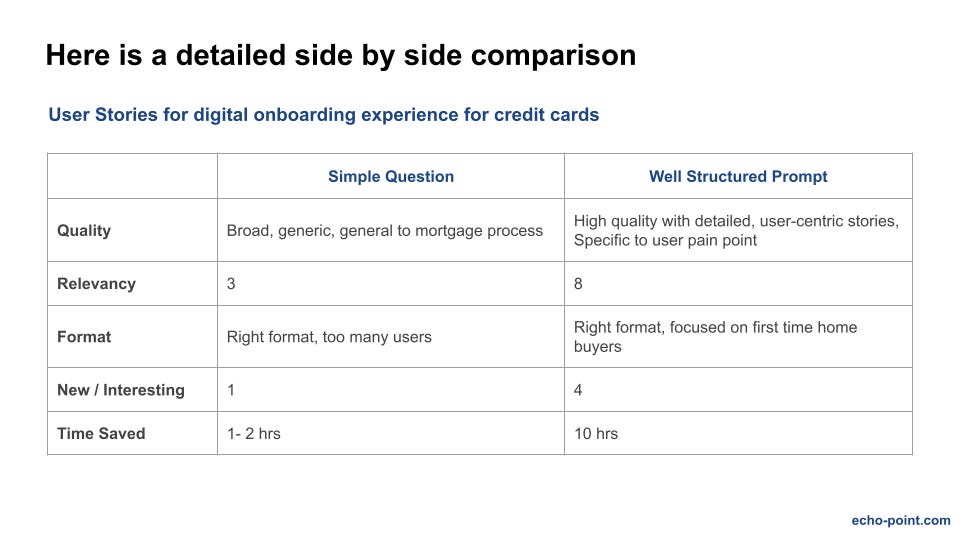

When you compare these results side by side, the difference is striking.

These responses work because the prompt gives the AI exactly what it needs.

So what’s their secret to generating higher-quality responses?

It’s all about applying the 5 Keys Framework—a simple, practical approach to crafting better prompts.

Assign It a Role: Tell the AI who it’s supposed to be. Giving it a persona helps align its tone and expertise with your needs.

Provide the Right Input: The AI can only work with what you give it. Supplying detailed, relevant context—like documents, URLs, or background information—sets it up for success.

Give Specific Instructions: Be clear about what you want. Vague prompts lead to vague answers, but specific instructions guide the AI toward meaningful results.

Specify the Format: Make it easy to use the output. Whether it’s bullet points, a table, or a narrative, telling the AI how to structure its response ensures the results are actionable.

Provide Examples: Show the AI what success looks like. A single example can go a long way in clarifying your expectations.
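If you reuse the five keys often, it can help to treat them as fields that must all be filled in before a prompt goes out. Here is a minimal Python sketch under that assumption; `PromptSpec`, `render`, and `missing_keys` are hypothetical names, and the section labels simply mirror the user story prompt.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """One field per key of the 5 Keys Framework (names are illustrative)."""

    role: str                      # Key 1: who the AI should be
    inputs: dict[str, str]         # Key 2: context, label -> value
    instructions: list[str]        # Key 3: specific instructions
    output_format: str             # Key 4: how the response should be structured
    examples: list[str] = field(default_factory=list)  # Key 5: what good looks like

    def missing_keys(self) -> list[str]:
        """Return the keys left empty; each gap leaves the AI to guess."""
        keys = {
            "role": self.role,
            "input": self.inputs,
            "instructions": self.instructions,
            "format": self.output_format,
            "examples": self.examples,
        }
        return [name for name, value in keys.items() if not value]

    def render(self) -> str:
        """Assemble the sections in the same order as the user story prompt."""
        sections = [f"Role:\n{self.role}"]
        sections.append(
            "Input:\n" + "\n".join(f"{label}: {value}" for label, value in self.inputs.items())
        )
        sections.append("Instructions:\n" + "\n".join(f"- {step}" for step in self.instructions))
        sections.append(f"Format:\n{self.output_format}")
        if self.examples:  # optional here, though the framework recommends it
            sections.append("Good Example:\n" + "\n".join(self.examples))
        return "\n\n".join(sections)


if __name__ == "__main__":
    spec = PromptSpec(
        role="Act like an expert in generating user stories.",
        inputs={"User": "First-time home buyer"},
        instructions=["Generate a list of 10 unique user stories."],
        output_format="As a [user], I want [goal] so that [benefit].",
    )
    print(spec.missing_keys())  # ['examples']
    print(spec.render())
```

Keeping `missing_keys` separate from `render` lets you warn about a skipped key (here, no example) without blocking the prompt entirely.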

Here’s the 5 Keys Framework applied to our user story prompt.

Following these five keys ensures you get clear, actionable, and high-quality results from AI.

What comes after prompt engineering? Unlocking even higher-quality results.

Better prompts are just the beginning—if you want to maximize AI’s potential, you need to go further.

Crafting better prompts with the 5 Keys Framework will dramatically improve the quality of your results. But if you’re looking to unlock the full power of large language models (LLMs), there’s more to it.

To truly elevate your AI game, you need to master additional skills and techniques:

Adopt an AI-First Mindset: Integrate AI into your workflow by actively identifying opportunities to leverage it.

Pick the Right LLM: Choose the model best suited for your task to ensure alignment with your goals.

Foster Human-AI Synergy: Know when to rely on AI and when human expertise is essential to maintain quality.

Break Down Micro Tasks: Deconstruct complex tasks into smaller, focused objectives for better results.

Personalize: Tailor prompts and workflows to your specific use case for greater relevance and accuracy.

Create a Prompt Library: Save time and ensure consistency by building a set of reusable, tested prompts for recurring tasks.

Evaluate and Refine: Review AI outputs and refine them to ensure they meet your standards.

Measure Results: Track metrics like time saved and workflow improvements to quantify AI’s impact.

Experiment Constantly: Regularly test new tools, prompts, and workflows to stay ahead.

Consider Ethics and Bias: Stay mindful of risks, biases, and unintended consequences in AI-generated outputs.

Use PM-Specific Tools: Explore platforms that integrate AI into product management processes.
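One way to start a prompt library is to store each tested prompt as a template with placeholders for the parts that change. This is a hypothetical sketch using Python's standard `string.Template`; the task names, the template wording, and `build_prompt` are illustrative assumptions, not a specific tool's API.

```python
from string import Template

# A hypothetical in-memory prompt library: task name -> reusable, tested template.
# $placeholders mark the parts that change from one use to the next.
PROMPT_LIBRARY: dict[str, Template] = {
    "user_stories": Template(
        "Role:\nAct like an expert in generating user stories.\n\n"
        "Input:\nFeature Description: $feature\nUser: $user\n\n"
        "Instructions:\nGenerate a list of $count unique user stories.\n\n"
        "Format:\nAs a [user], I want [goal] so that [benefit]."
    ),
    "feedback_summary": Template(
        "Role:\nAct like an experienced product researcher.\n\n"
        "Input:\n$feedback\n\n"
        "Instructions:\nGroup the feedback into themes and rank them by frequency.\n\n"
        "Format:\nA table with columns Theme, Count, Example Quote."
    ),
}


def build_prompt(task: str, **values: str) -> str:
    """Fill a saved template; raises KeyError if the task or a placeholder is missing."""
    template = PROMPT_LIBRARY[task]
    return template.substitute(**values)


if __name__ == "__main__":
    print(build_prompt("user_stories", feature="Online document upload",
                       user="First-time home buyer", count="10"))
```

Because `substitute` raises `KeyError` when a placeholder is left unfilled, a prompt with a missing input fails loudly instead of reaching the AI half-written.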

By mastering these skills, you’ll transform AI from a helpful tool into your most powerful productivity partner.